Unsupervised Pre-training of Graph Transformers on Patient Population Graphs

Chantal Pellegrini, Nassir Navab, Anees Kazi

Medical Image Analysis 89, 2023 · arXiv:2207.10603

Abstract

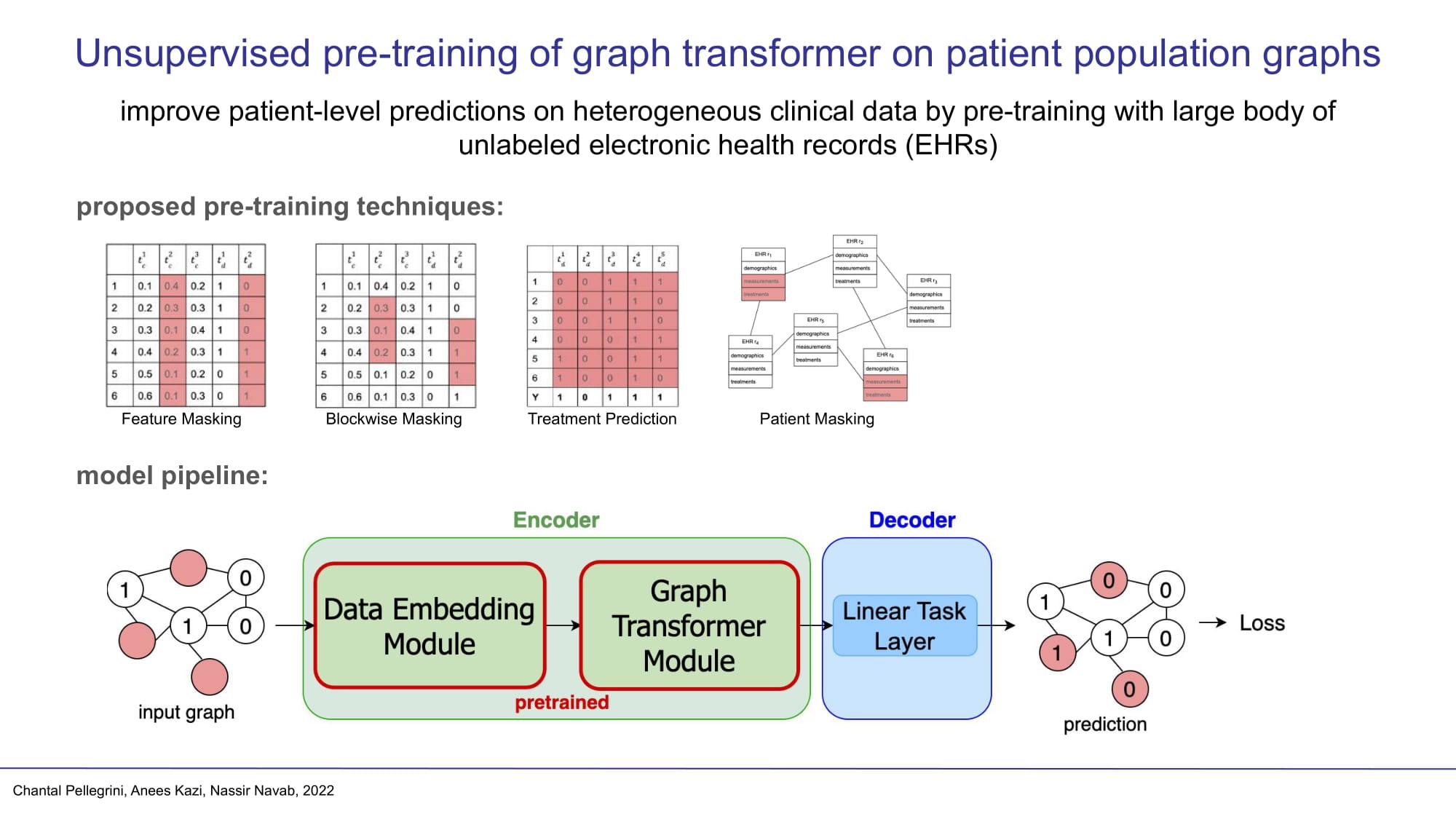

Inspired by masked language modeling (MLM), we propose novel unsupervised pre-training techniques for heterogeneous, multi-modal clinical data that leverage graph deep learning over population graphs for patient outcome prediction. To this end, we also propose a graph-transformer-based network designed to handle heterogeneous clinical data. By combining masking-based pre-training with a transformer-based network, we transfer the success of masking-based pre-training in other domains to heterogeneous clinical data. We show the benefit of our pre-training method in both a self-supervised and a transfer learning setting on three medical datasets: TADPOLE, MIMIC-III, and a Sepsis Prediction Dataset. We find that the proposed pre-training methods help model the data at both the patient and the population level and improve performance on different fine-tuning tasks across all datasets.
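The core idea, hiding parts of each patient's record and recovering them from similar patients in a population graph, can be illustrated with a small, self-contained sketch. This is not the authors' implementation. The graph rule (link patients whose feature vectors lie within a Euclidean distance threshold), the constant mask value of 0.0, the parameter-free neighbor-restricted attention step, and the mean-squared-error objective on masked entries are all assumptions chosen for illustration, and the helper names (`build_population_graph`, `mask_features`, `graph_attention`, `masked_reconstruction_loss`) are hypothetical. A real graph transformer would add learnable projections, treat categorical and continuous features differently, and optimize over many masked batches.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]
MASK_VALUE = 0.0  # assumption: masked entries are overwritten with a constant placeholder


def build_population_graph(features: Matrix, threshold: float) -> List[List[int]]:
    """Hypothetical rule: link patients whose feature vectors are within `threshold`
    (Euclidean distance). Every patient is also linked to itself."""
    n = len(features)
    neighbors = [[i] for i in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(features[i], features[j]) <= threshold:
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors


def mask_features(
    features: Matrix, mask_rate: float, rng: random.Random
) -> Tuple[Matrix, List[Tuple[int, int]]]:
    """MLM-style masking: hide each entry independently with probability `mask_rate`.
    Returns the masked copy and the (patient, feature) positions that were hidden."""
    masked = [row[:] for row in features]
    positions = []
    for i, row in enumerate(features):
        for k in range(len(row)):
            if rng.random() < mask_rate:
                masked[i][k] = MASK_VALUE
                positions.append((i, k))
    return masked, positions


def graph_attention(x: Matrix, neighbors: List[List[int]]) -> Matrix:
    """One parameter-free attention step: each patient attends only to its graph
    neighbors, using scaled dot-product scores and softmax weights."""
    d = len(x[0])
    out = []
    for i, nbrs in enumerate(neighbors):
        scores = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in nbrs]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * x[j][k] for w, j in zip(weights, nbrs)) / total for k in range(d)])
    return out


def masked_reconstruction_loss(
    pred: Matrix, target: Matrix, positions: List[Tuple[int, int]]
) -> float:
    """Mean squared error over masked entries only (analogous to MLM scoring only
    masked tokens; MSE is an assumption suited to continuous features)."""
    if not positions:
        return 0.0
    return sum((pred[i][k] - target[i][k]) ** 2 for i, k in positions) / len(positions)


if __name__ == "__main__":
    rng = random.Random(0)
    # Four toy patients with three continuous features each (made-up values).
    patients = [[1.0, 0.5, 0.2], [0.9, 0.6, 0.1], [0.1, 2.0, 1.5], [0.2, 1.8, 1.4]]
    graph = build_population_graph(patients, threshold=0.5)
    masked, positions = mask_features(patients, mask_rate=0.3, rng=rng)
    reconstructed = graph_attention(masked, graph)
    print("masked positions:", positions)
    print("reconstruction loss:", masked_reconstruction_loss(reconstructed, patients, positions))
```

In this sketch, restricting attention to graph neighbors is what lets a patient's hidden entries be estimated from similar patients, loosely mirroring how MLM recovers a masked token from its surrounding context.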

Citation

@article{pellegrini2023unsupervised,
  title={Unsupervised pre-training of graph transformers on patient population graphs},
  author={Pellegrini, Chantal and Navab, Nassir and Kazi, Anees},
  journal={Medical Image Analysis},
  volume={89},
  pages={102895},
  year={2023},
  publisher={Elsevier}
}