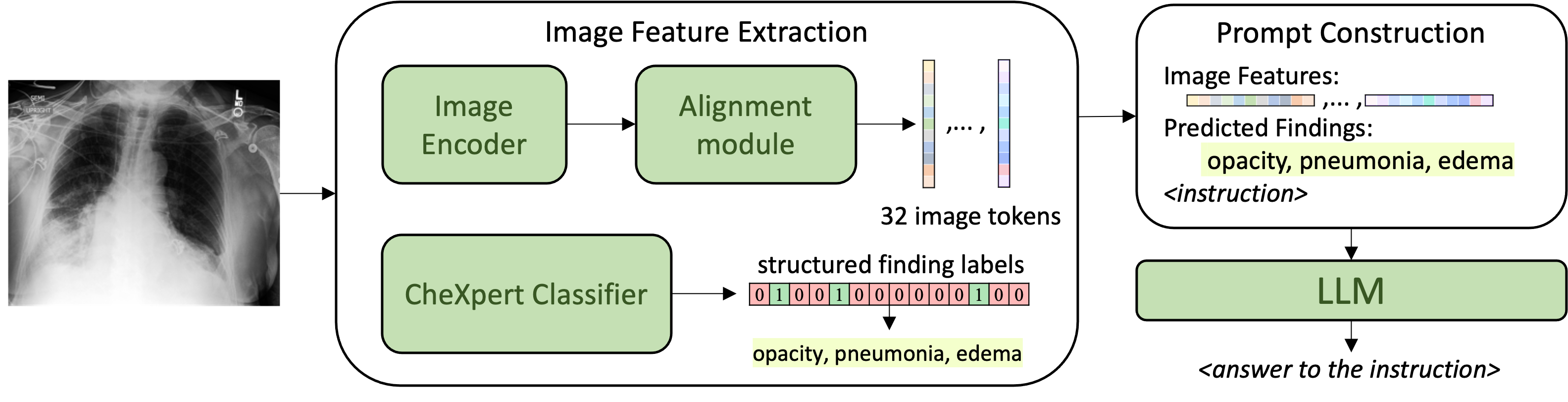

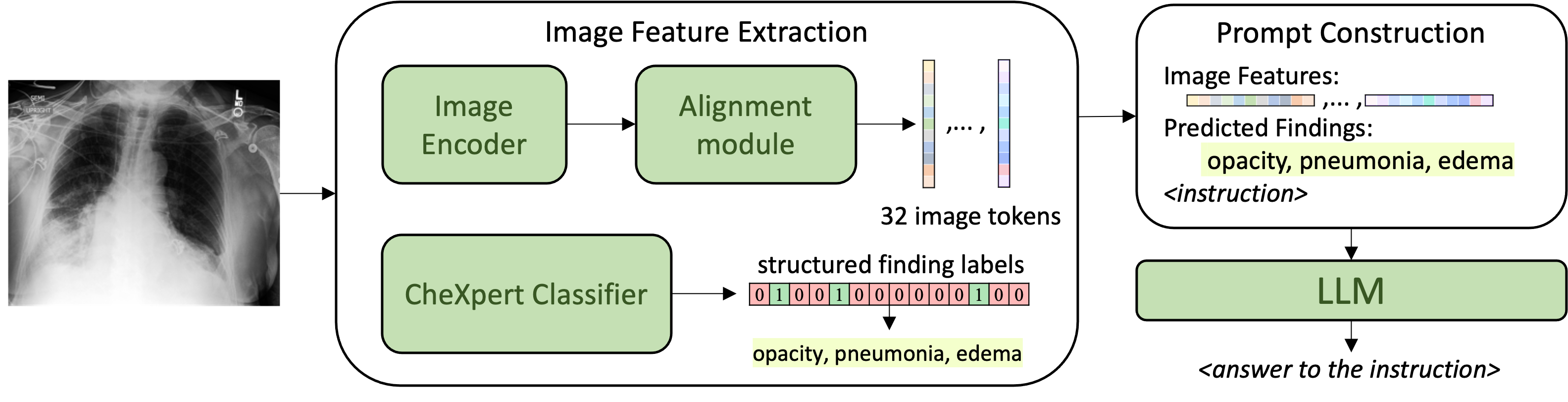

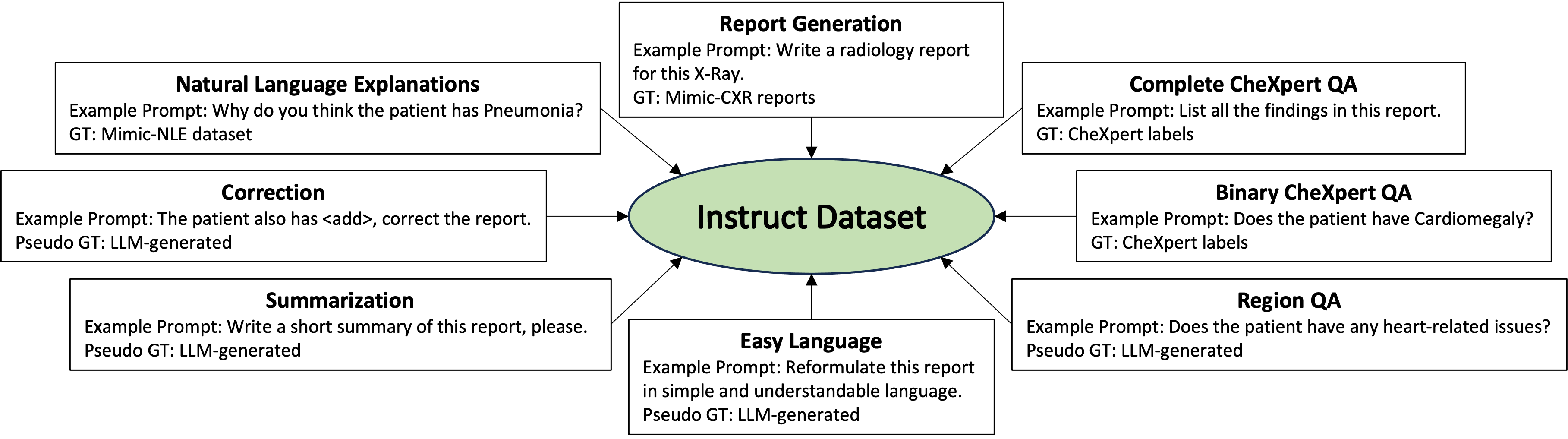

Methodology

Conversational AI tools that can generate and discuss clinically correct radiology reports for a given medical image have the potential to transform radiology. Such a human-in-the-loop radiology assistant could facilitate a collaborative diagnostic process, saving time and improving the quality of reports. Toward this goal, we introduce RaDialog, the first thoroughly evaluated and publicly available large vision-language model for radiology report generation and interactive dialog. RaDialog integrates visual image features and structured pathology findings with a large language model (LLM) while simultaneously adapting it to a specialized domain using parameter-efficient fine-tuning. To preserve the conversational abilities of the underlying LLM, we propose a comprehensive, semi-automatically labeled, image-grounded instruct dataset for chest X-ray radiology tasks. By training with this dataset, our method achieves state-of-the-art clinical correctness in report generation and shows impressive abilities in interactive tasks such as correcting reports and answering questions, serving as a foundational step toward clinical dialog systems.
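To make the idea of combining image features and structured pathology findings in one LLM input more concrete, here is a minimal sketch of how such a prompt could be assembled. It is not the RaDialog implementation: the `<IMG>` placeholder, the prompt wording, the function names, the 0.5 threshold, and the example scores are all assumptions made for illustration.

```python
from typing import Mapping

# Hypothetical marker for the position where projected image features
# would be spliced into the LLM input sequence.
IMAGE_PLACEHOLDER = "<IMG>"


def findings_to_text(scores: Mapping[str, float], threshold: float = 0.5) -> str:
    """Turn per-pathology classifier scores into a short text line.

    Labels scoring at or above the (assumed) threshold are listed
    alphabetically; if none qualify, "No Finding" is returned.
    """
    positive = sorted(name for name, p in scores.items() if p >= threshold)
    return ", ".join(positive) if positive else "No Finding"


def build_prompt(scores: Mapping[str, float], instruction: str,
                 threshold: float = 0.5) -> str:
    """Assemble one prompt: image slot, structured findings, then the task."""
    return (
        f"Image information: {IMAGE_PLACEHOLDER}\n"
        f"Predicted findings: {findings_to_text(scores, threshold)}\n"
        f"Instruction: {instruction}\n"
        "Response:"
    )


# Made-up scores for illustration only; these are not results from the paper.
example_scores = {"Cardiomegaly": 0.81, "Pleural Effusion": 0.64, "Pneumothorax": 0.07}
prompt = build_prompt(example_scores, "Write the findings section of the radiology report.")
print(prompt)
```

The same template could carry other instructions, such as a request to correct a report or a follow-up question, which is one simple way to picture a single model serving both report generation and dialog.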

@inproceedings{pellegrini2025radialog,
  title={RaDialog: Large Vision-Language Models for X-Ray Reporting and Dialog-Driven Assistance},
  author={Pellegrini, Chantal and {\"O}zsoy, Ege and Busam, Benjamin and Wiestler, Benedikt and Navab, Nassir and Keicher, Matthias},
  booktitle={Medical Imaging with Deep Learning},
  year={2025}
}